[Editor’s note: Originally this article listed a grade for Expository Writing (a C, which brought the cumulative GPA to a 3.34). However, the prompt and grade for this class were provided by an English Department grad student who is not an Expository Writing instructor. We apologize for the error and have deleted this grade.]

(Image credit: Carly Bodnick)

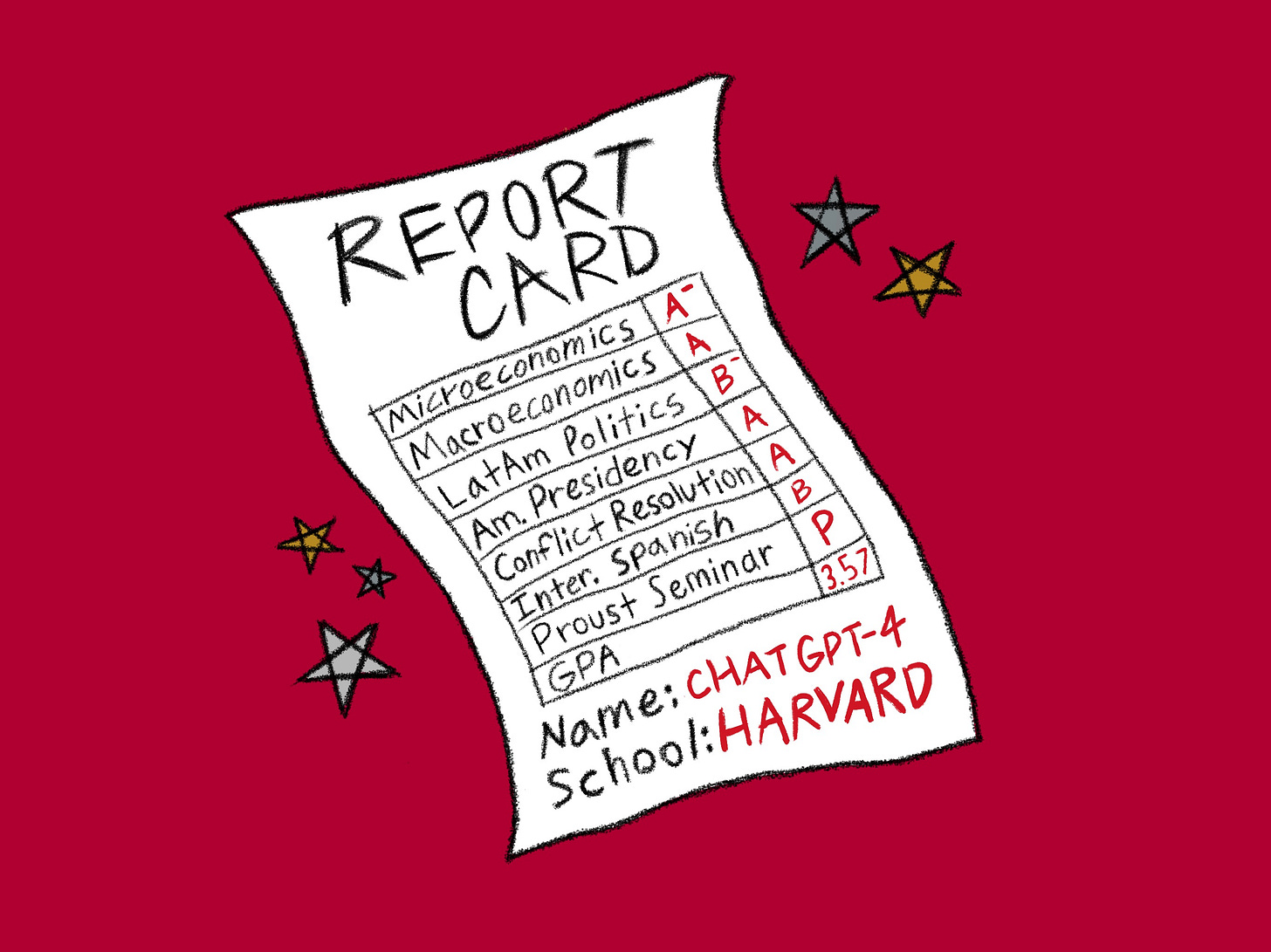

A. A. A. A-. B. B-. Pass.

That’s a solid report card for a freshman in college, a respectable 3.57 GPA. I just finished my freshman year at Harvard, but those grades aren’t mine — they’re ChatGPT-4’s.

Take-home writing assignments are the foundation of a social science and humanities education at liberal arts colleges around the U.S. Professors use these assignments to assess students’ knowledge of the course material and their creative and analytical thinking. But the rise of advanced large language models like ChatGPT-4 threatens the future of the take-home essay as an assessment tool. [1]

I wanted to see for myself: could ChatGPT-4 pass my freshman year at Harvard?

The experiment

Two weeks ago, I asked seven Harvard professors and teaching assistants to grade essays written by ChatGPT-4 in response to a prompt assigned in their class. [2] Here are the summarized prompts with links to the essays:

Microeconomics and Macroeconomics (Professors Jason Furman and David Laibson): Explain an economic concept creatively (300-500 words for Micro and 800-1000 words for Macro).

Latin American Politics (Professor Steven Levitsky): What has caused the many presidential crises in Latin America in recent decades (5-7 pages)?

The American Presidency (Professor Roger Porter): Pick a modern president and identify his three greatest successes and three greatest failures (6-8 pages).

Conflict Resolution (Professor Daniel Shapiro): Describe a conflict in your life and give recommendations for how to negotiate it (7-9 pages).

Intermediate Spanish (Preceptor Adriana Gutierrez): Write a letter to activist Rigoberta Menchú (550-600 words).

Freshman Seminar on Proust (Professor Virginie Greene): Close-read a passage from “In Search of Lost Time” (3-4 pages).

I told these instructors that each essay might have been written by me or the AI in order to minimize response bias, although in fact they were all written by ChatGPT-4. I submitted exactly what ChatGPT-4 wrote, except that I sequenced multiple responses in order to meet the word count (ChatGPT-4 only writes about 750 words at a time). Finally, I told the professors and TAs to grade these essays normally, except to ignore citations, which I didn’t include.

The results

Not only can ChatGPT-4 pass a typical social science and humanities-focused freshman year at Harvard, but it can get pretty good grades. As shown in the report card above, ChatGPT-4 got a mix of As and Bs and one Pass; these grades averaged out to a 3.57 GPA.
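For readers who want to check the arithmetic, here is a minimal sketch of how the 3.57 figure can be reproduced. The grade-point scale below (A = 4.0, A- = 3.7, B = 3.0, B- = 2.7, C = 2.0) and the choice to leave the Pass out of the average are assumptions, not something stated in this article; they happen to match both the 3.57 here and the 3.34 mentioned in the editor's note.

```python
# Assumed grade-point scale; the article does not state which scale was used.
GRADE_POINTS = {"A": 4.0, "A-": 3.7, "B": 3.0, "B-": 2.7, "C": 2.0}


def gpa(grades):
    """Average the grade points of letter grades.

    Marks without a point value on the assumed scale (such as "Pass")
    are left out of the average.
    """
    points = [GRADE_POINTS[g] for g in grades if g in GRADE_POINTS]
    return sum(points) / len(points)


report_card = ["A", "A", "A", "A-", "B", "B-", "Pass"]
print(f"{gpa(report_card):.2f}")          # 3.57
print(f"{gpa(report_card + ['C']):.2f}")  # 3.34, with the deleted C included
```

On a scale that uses 3.67 and 2.67 for the minus grades, the same report card would come out closer to 3.56, so the exact figure depends on the scale assumed.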

Several of the professors and TAs were impressed with ChatGPT-4’s prose: “It is beautifully written!” “Well written and well articulated paper.” “Clear and vividly written.” “The writer’s voice comes through very clearly.” But this wasn’t universal; my Conflict Resolution TA criticized ChatGPT-4’s flowery writing style: “I might urge you to simplify your writing; it feels as though you’re overdoing it with your use of adjectives and metaphors.”

Compared to their feedback on style, the professors and TAs were more modestly positive about the content of the essays. My American Presidency TA gave ChatGPT-4’s paper an A based on his assessment that “the paper does a very good job of hitting each requirement,” while my Microeconomics TA awarded an A in part because he liked the essay’s “impressive… attention to detail.” I thought ChatGPT-4 was particularly creative in coming up with a (coincidentally topical!) fake conflict for the Conflict Resolution class:

I've discovered that Neil [my roommate] has been using an advanced AI system to complete his assignments, something far more sophisticated than the plagiarism detection software can currently uncover... To me... it feels like a betrayal. Not just of the university's code of academic honesty, but of the unspoken contract between us, of our shared sweat and tears, of the respect for the struggle that is inherent in learning. I've always admired his genius, but now it feels tainted, a mirage of artificially inflated success that belies the real spirit of intellectual curiosity and academic rigor.

This is an ironic/super-meta choice of a subject given the purpose of this experiment. My Conflict Resolution TA loved the essay’s analysis and gave it an A:

Your three concrete diagnoses of what the underlying causes of your conflict are, for the most part, persuasive, well backed, and made great use of the course concepts. Each of your prescriptive strategies responded substantively to a diagnosis and also correctly invoked the course concepts. Your tactics for putting these strategies into action were specific, compelling, useful, & operational.

But this unusual essay aside, substance (and especially argument) is where the lower-graded papers fell short. Preceptor Gutierrez gave the Spanish paper a B in part because it had “no analysis.” And Professor Levitsky had serious issues with the Latin America paper’s thesis:

“...the paper fails to deal with any of the arguments in support of presidentialism or coalitional presidentialism and completely fails to take economic factors into account. Heavy reliance on charisma, which is exogenous to presidentialism. And Venezuela, I think, is not a great choice of cases. Presidentialism is not what killed democracy in Venezuela.”

Professor Levitsky awarded ChatGPT its lowest grade, a B-, which is still multiple grades above an F.

I think we can extrapolate from ChatGPT-4’s overall solid performance that AI-generated essays can probably get passing grades in liberal arts classes at most universities around the country. Harvard has a grade inflation problem, so one way to interpret my experiment would be to say “this actually just shows that it’s easy to get an A at Harvard.” But while that might be true, if you read the ChatGPT-4-generated essays (which are hyperlinked above), they’re pretty good. Maybe at Princeton or UC Berkeley (which both grade more rigorously), the As and Bs would be Bs and Cs, but these are still passing grades.

What does this mean?

I think artificial intelligence will completely upend how we teach the humanities and social sciences.

Before ChatGPT, the vast majority of college students I know often consulted Google for help with their essays, but the internet hasn’t been all that useful for true high-level plagiarism because you simply can’t find good answers to complex, specific, creative, or personal prompts. For example, the internet would not be very helpful in answering the Conflict Resolution prompt, which was very specific (the assignment was a page long) and personal (it requires students to write about an experience in their life).

In the era of internet cheating, students would have to put some work into finding material online and splice it together to match the prompt, almost certainly intermixed with some of their own writing. And they’d have to create their own citations. Also, the risk of getting caught is huge. Most students are deterred from copy-and-pasting online material for fear that plagiarism detectors or their instructors will catch them.

Now, ChatGPT has solved these problems and made cheating on take-home essays easier than ever. ChatGPT can answer any prompt specifically. It’s not always perfect, but I’ve found that accuracy has improved enormously from ChatGPT-3.5 to ChatGPT-4 and will only get better as OpenAI keeps innovating. ChatGPT can generate a full answer that requires little editing or sourcing work from the student, and it’s improving at citations. ChatGPT can even respond to creative, personal prompts.

Finally, students don’t have to worry nearly as much about getting caught using ChatGPT. AI detectors are still very flawed and have not been widely rolled out by U.S. colleges and universities. And while ChatGPT might sometimes copy another intellectual’s ideas in a way that might make a professor suspicious of plagiarism, more often it generates the type of fairly unoriginal synthesis writing that’s rewarded in non-advanced university classes. It’s worth noting that ChatGPT doesn’t write the same thing every time when given the same prompt, and over time ChatGPT will almost certainly get even better at creating a writing tone that feels personal and unique; it’s possible ChatGPT might even learn each person’s writing style and adapt its responses to fit that style.

ChatGPT has made cheating so simple — and for now, so hard to catch — that I expect many students will use it when writing essays. Currently about 60% of college students admit to cheating in some form, and last year 30% used ChatGPT for schoolwork. That was only in the first year of the model’s launch to the public. As it improves and develops a reputation for high-quality writing, this usage will increase.

Next year, if college students are willing to use ChatGPT-4, they should be able to get passing grades on all of their essays with almost no work. In other words, ChatGPT-4 will eliminate Ds and Fs in the humanities and social sciences. And that’s only eight months after ChatGPT’s release to the public; the technology is rapidly improving. In March, OpenAI released an updated model (GPT-4) which has a training data set 571 times the size of the original model. Nobody can predict the future, but if AI continues to improve at even a fraction of this breakneck pace, I wouldn’t be surprised if soon enough ChatGPT could ace every social science and humanities class in college.

I believe this puts us on a path to a complete commodification of the liberal arts education. Right now, ChatGPT enables students to pass college classes — and eventually, it’ll help them excel — without learning, developing critical thinking skills, or working hard. The tool risks intellectually impoverishing the next generation of Americans.

What can colleges do?

My initial reaction to the rise of AI was that teachers should embrace it, much like they did with the internet 20–25 years ago. [3] Professors could set a ChatGPT-generated response to their essay prompt as equivalent to a poor grade (say, a D). Students would have to improve on the quality of the AI’s work to get an A, B, or C. But this is impossible in a world in which ChatGPT-4 can already get As and Bs in Harvard classes. If it hasn’t already, soon enough ChatGPT will surpass the average college student’s writing abilities, so it will not be reasonable to set a “D” grade equal to ChatGPT’s performance.

There are other versions of embracing AI, like Ben Thompson’s idea that schools could have students generate homework answers on an in-house LLM and assess them on their ability to verify the answers the AI produces. But Ben’s proposal doesn’t prevent cheating: how would a teacher know if a student used a different LLM to verify answers, then input these into the school’s system? And it’s not enough to teach students to verify; they need to learn analytical thinking and how to compose their own thoughts. This is especially true in the formative years of middle and high school, which are the focus of Ben’s piece.

If educators can’t embrace AI, they need to effectively prevent its usage.

One approach is using AI detectors to prevent cheating on take-home essays. However, these detectors are deeply imperfect. According to a preprint study from University of Maryland Professor Soheil Feizi, “current detectors of AI aren’t reliable in practical scenarios... we can use a paraphraser and the accuracy of even the best detector we have drops from 100% to the randomness of a coin flip.” For example, OpenAI’s detector correctly flags only 26% of AI-written text. The Washington Post tested an alternative from Turnitin and found that it made mistakes on most of the texts they tried.

Maybe AI detectors will soon become accurate enough that they will be able to be widely implemented (like internet plagiarism detectors) and solve the issue of AI cheating. [4] But student demand for AI detection evasion tools will likely outpace schools’ demands for better detectors, especially because making accurate detectors seems like a harder problem than evading them. And even if detectors were accurate, students could rewrite the AI’s words on their own.

Given the limitations of embracing AI and AI detection, I think professors have no choice but to shift take-home essays to an in-person format, partially or entirely. The simplest solution would be to have students write during seated, proctored exams (instead of at home). Alternatively, students could write the first draft of their essay during this proctored window, submit it to their TA, and continue to edit their work at home. TAs would grade these essays based on the final submission, while reviewing the first draft to make sure that the student did not change their main points (possibly with the help of AI) during the take-home period.

Unfortunately, there’s a trade-off between writing quality and cheating prevention. While students can improve their essays by editing at home, they won’t be able to truly iterate on their thesis. College should ideally encourage students to develop and iterate on ideas for more than a couple of hours, as people do in the real world. This system would also impose additional burdens on TAs to cross-reference the drafts with the final copy and check for cheating. It seems inevitable that AI will force educators to spend more time worrying about cheating.

This isn’t just about homework

Educators at all levels — not just college professors — are trying to figure out how to prevent students from writing their essays with AI. At the middle and high school level, deterring AI cheating is clearly important to ensure that students develop critical thinking skills.

However, at the college level, I think that efforts to prevent cheating with ChatGPT are more complicated. Even if colleges can successfully prevent students from using ChatGPT to write their essays, that won’t prevent the AI from taking their jobs after graduation. Many social science and humanities students go on to take jobs that involve similar work to the writing they did in college. If AI can perfectly replicate the college work that prepares people for these professions, soon it will be able to replicate the jobs themselves. In law, for example, the world still needs the most senior people around to make the hardest of calls, but AI could automate the vast majority of the legal writing grunt work. Other fields are under similar threat: marketing, sales, journalism, customer service, business consulting, screenwriting, and administrative office work.

The impact that AI is having on liberal arts homework is probably indicative of the AI threat to career fields that liberal arts people tend to enter. So maybe what we should really be focused on isn’t “how do we make liberal arts homework better?” but rather, “what are jobs going to look like over the next 10–20 years, and how do we prepare students to succeed in that world?” The answers to those questions might suggest that students shouldn’t be majoring in the liberal arts at all.

My gut reaction is that liberal arts majors — who spend most of their academic career writing essays — are going to face even greater difficulties in a post-AI world. AI isn’t just coming for the college essay; it’s coming for the cerebral class.

(Thank you to Yair Livne, Timothy Lee, Adelaide Parker, and my teachers for their help with this post.)

[1] I’m focusing on ChatGPT’s impact on essay writing for the humanities and social sciences in this article, but AI has raised issues for other fields. For example, CS professors are grappling with its ability to write code. In Harvard’s introductory CS class (CS50), Professor David Malan has addressed this problem by recently releasing an AI that students are allowed to use to help with their coding.

[2] I listed the professors or preceptors for all of these classes, but some of the essays were graded by TAs.

[3] While professors did set up deterrents for flat-out plagiarism, they came to expect students to use the internet for research and inspiration. Because research is so much easier with the internet, students likely cite more sources in the average college paper now than they did a couple decades ago. As a result, professors have probably raised their grading standards around research.

[4] As detectors improve (or if they turn out to be an imperfect long-term solution), there may be other ways to both identify and deter AI cheating. For example, fellow Substacker Timothy Lee told me his idea that professors could require students to submit their Google Docs revision history. This could be analogous to math teachers asking students to “show their work” in the age of calculators: it would allow professors to see if a student copied and pasted large parts of their work and flag these essays for cheating. However, a truly dedicated student could generate an essay with ChatGPT, type it in manually, and edit it so it looked as though they had composed it themselves. That would still be faster than writing their own essay, so the idea is good but clearly not bulletproof.

The answer is simple: have Texas Instruments release a simple LLM and then force students to buy that same largely unmodified LLM for the next 40 years at an inflated price.

Wonderful essay, Maya. Yet another piece of evidence that Harvard has lax standards ;)